Flink AI Flow

Introduction

Flink AI Flow is an open source framework that bridges big data and artificial intelligence. It manages the entire machine learning project lifecycle as a unified workflow, including feature engineering, model training, model evaluation, model service, model inference, monitoring, etc. Throughout the entire workflow, Flink is used as the general purpose computing engine.

In addition to the capability of orchestrating a group of batch jobs, by leveraging an event-based scheduler(enhanced version of Airflow), Flink AI Flow also supports workflows that contain streaming jobs. Such capability is quite useful for complicated real-time machine learning systems as well as other real-time workflows in general.

Features

You can use Flink AI Flow to do the following:

-

Define the machine learning workflow including batch/stream jobs.

-

Manage metadata(generated by the machine learning workflow) of date sets, models, artifacts, metrics, jobs etc.

-

Schedule and run the machine learning workflow.

-

Publish and subscribe events

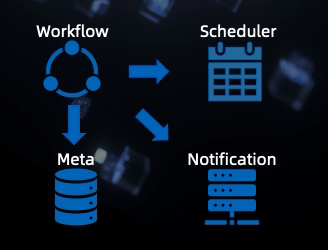

To support online machine learning scenarios, notification service and event-based schedulers are introduced. Flink AI Flow's current components are:

-

SDK: It defines how to build a machine learning workflow and includes the api of the Flink AI Flow.

-

Notification Service: It provides event listening and notification functions.

-

Meta Service: It saves the meta data of the machine learning workflow.

-

Event-Based Scheduler: It is a scheduler that triggered jobs by some events happened.

Documentation

QuickStart

You can use Flink AI Flow according to the guidelines of Quick Start. Besides, you can also take a look at our Tutorial for writing your own workflow.

API

Please refer to the API Documentation to find the API supported by Flink AI Flow.

Design

If you are interested in design principle of Flink AI Flow, please see the Design Documentation for more details.

Examples

You can refer to some examples of Flink AI Flow to have a better understanding of how to write a workflow. Please see the Examples directory.

Reporting bugs

If you encounter any issues please open an issue in github and we encourage you to provide a patch through github pull request as well.

Contributing

We happily welcome contributions to Flink AI Flow. Please see our contribution guide for details.

Contact Us

For more information, you can join the Flink AI Flow Users Group on DingTalk to contact us. The number of the DingTalk group is 35876083.

You can also join the group by scanning the QR code below: