Introduction

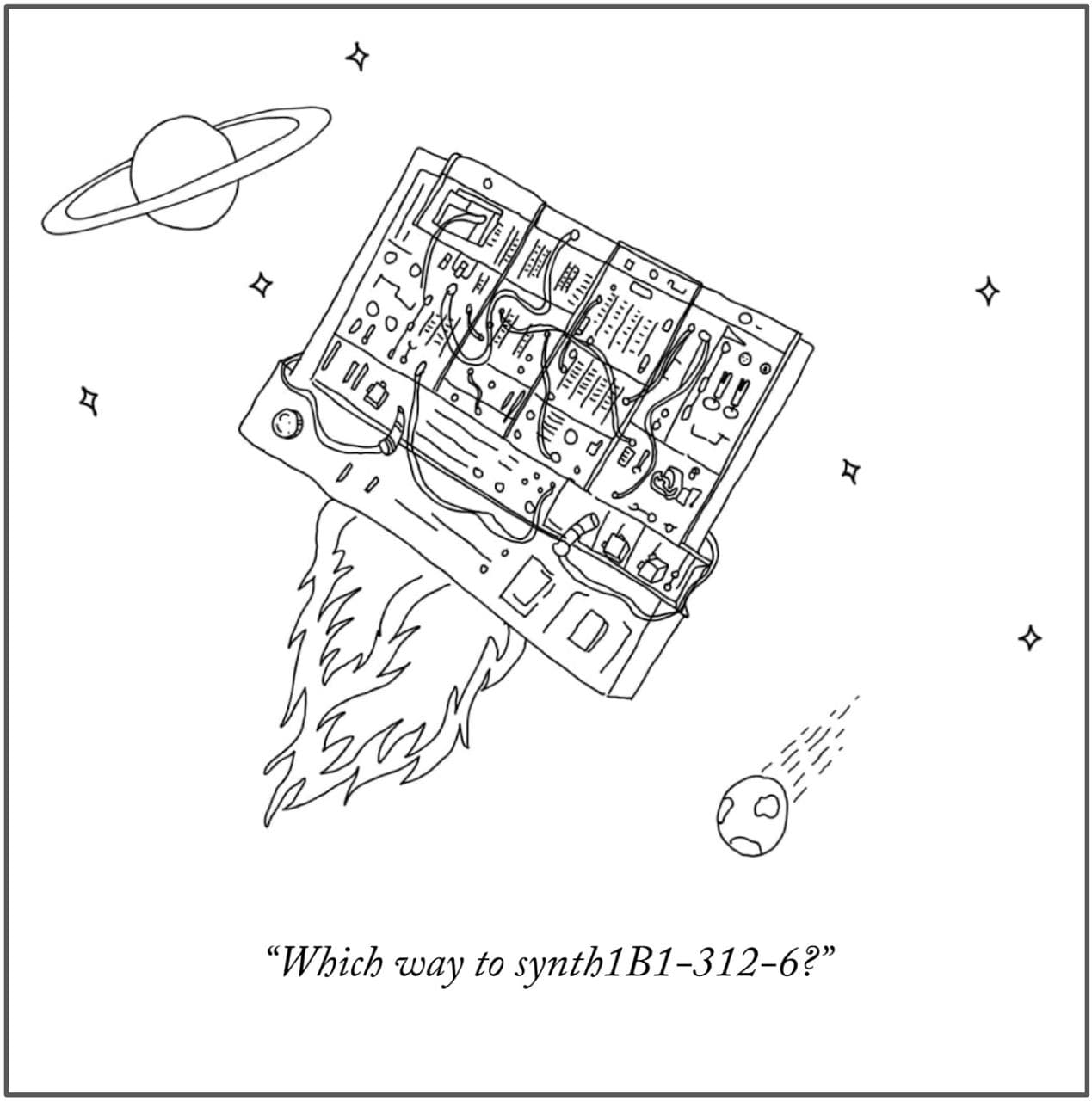

torchsynth is a synthesizer based on traditional modular synthesis and written in PyTorch. It is GPU-optional and differentiable.

Most synthesizers are designed for low latency. torchsynth is instead designed for high throughput: it synthesizes audio 16200x faster than realtime (714 MHz, i.e. about 714 million audio samples per second) on a single GPU. This is of particular interest to audio ML researchers who need large training corpora.
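The throughput figure above can be sanity-checked with a little arithmetic. The sketch below assumes a sample rate of 44.1 kHz, which is not stated in the text but is consistent with the quoted numbers; the constant and function names are illustrative only.

```python
# Assumption: 44.1 kHz sample rate (not stated above, but consistent with 714 MHz).
SAMPLE_RATE_HZ = 44_100
# From the text above: audio is synthesized 16200x faster than realtime.
REALTIME_FACTOR = 16_200

# Audio samples produced per wall-clock second at that speedup.
samples_per_second = REALTIME_FACTOR * SAMPLE_RATE_HZ


def wall_clock_seconds(audio_seconds: float) -> float:
    """Wall-clock time needed to render `audio_seconds` of audio."""
    return audio_seconds / REALTIME_FACTOR


print(f"{samples_per_second / 1e6:.0f} MHz")                      # 714 MHz
print(f"One hour of audio: {wall_clock_seconds(3600):.3f} s")     # 0.222 s
```

Under these assumptions, an hour of audio takes roughly a fifth of a second to render.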

Additionally, every synthesized sound is returned together with the latent parameters that generated it. This is useful for multi-modal training regimes.
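As a rough illustration of what "audio plus parameters" looks like in practice, the sketch below renders one batch from the Voice synthesizer. It assumes that `Voice` is importable from `torchsynth.synth` and that calling it with a batch index returns an `(audio, parameters, is_train)` triple; check both against the documentation for your installed version. The wrapper name `render_batch` is hypothetical.

```python
def render_batch(batch_idx: int = 0):
    """Render one deterministic batch and return audio with its parameters.

    Imports are kept inside the function so that this file can be loaded
    even where torch / torchsynth are not installed.
    """
    import torch
    from torchsynth.synth import Voice  # assumed import path

    voice = Voice()
    # torchsynth is GPU-optional: move to the GPU only if one is available.
    if torch.cuda.is_available():
        voice = voice.to("cuda")

    # Assumed return value: audio tensor, parameter tensor, train/test flags.
    audio, parameters, is_train = voice(batch_idx)
    return audio, parameters, is_train
```

Calling `render_batch(0)` would then give an audio tensor and a parameter tensor whose first dimensions match, so each row of `parameters` can serve as the label for the corresponding sound.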

Installation

pip3 install torchsynth

Note that torchsynth requires PyTorch version 1.8 or greater.
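If you are unsure whether an existing environment meets this requirement, a small check along the following lines may help. This is a minimal sketch: `meets_minimum` is a hypothetical helper, not part of torchsynth or PyTorch, and it compares only the major and minor version numbers.

```python
import re


def meets_minimum(version: str, minimum=(1, 8)) -> bool:
    """Return True if a version string such as '1.8.1+cu111' is >= minimum."""
    # Read only the leading "major.minor" digits, ignoring patch numbers and
    # local build suffixes such as '+cu111'.
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    # Compare as integer tuples so that 1.10 correctly ranks above 1.8.
    return tuple(int(part) for part in match.groups()) >= tuple(minimum)
```

With PyTorch installed, `meets_minimum(torch.__version__)` reports whether the running version is recent enough.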

Listen

If you'd like to hear torchsynth, check out synth1K1, a dataset of 1024 4-second sounds rendered from the Voice synthesizer, or listen on SoundCloud.

Citation

If you use this work in your research, please cite:

@inproceedings{turian2021torchsynth,
    title = {One Billion Audio Sounds from {GPU}-enabled Modular Synthesis},
    author = {Joseph Turian and Jordie Shier and George Tzanetakis and Kirk McNally and Max Henry},
    year = 2021,
    month = Sep,
    booktitle = {Proceedings of the 23rd International Conference on Digital Audio Effects (DAFx2020)},
    location = {Vienna, Austria}
}