CMaskTrack R-CNN for OVIS

This repo serves as the official code release of the CMaskTrack R-CNN model on the Occluded Video Instance Segmentation dataset described in the tech report:

Occluded Video Instance Segmentation

Jiyang Qi1,2*, Yan Gao2*, Yao Hu2, Xinggang Wang1, Xiaoyu Liu2,

Xiang Bai1, Serge Belongie3, Alan Yuille4, Philip Torr5, Song Bai2,5📧

1Huazhong University of Science and Technology 2Alibaba Group 3University of Copenhagen

4Johns Hopkins University 5University of Oxford

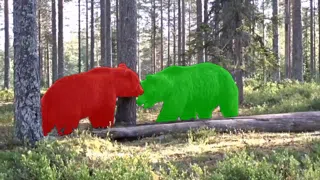

In this work, we collect a large-scale dataset called OVIS for Occluded Video Instance Segmentation. OVIS consists of 296k high-quality instance masks from 25 semantic categories, where object occlusions usually occur. While our human vision systems can understand those occluded instances by contextual reasoning and association, our experiments suggest that current video understanding systems cannot, which reveals that we are still at a nascent stage for understanding objects, instances, and videos in a real-world scenario.

We also present a simple plug-and-play module that performs temporal feature calibration to complement missing object cues caused by occlusion.

Some annotation examples can be seen below:

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

For more details about the dataset, please refer to our paper or website.

Model training and evaluation

Installation

This repo is built based on MaskTrackRCNN. A customized COCO API for the OVIS dataset is also provided.

You can use following commands to create conda env with all dependencies.

conda create -n cmtrcnn python=3.6 -y

conda activate cmtrcnn

conda install -c pytorch pytorch=1.3.1 torchvision=0.2.2 cudatoolkit=10.1 -y

pip install -r requirements.txt

pip install git+https://github.com/qjy981010/cocoapi.git#"egg=pycocotools&subdirectory=PythonAPI"

bash compile.sh

Data preparation

- Download OVIS from our website.

- Symlink the train/validation dataset to

data/OVIS/folder. Put COCO-style annotations underdata/annotations.

mmdetection

├── mmdet

├── tools

├── configs

├── data

│ ├── OVIS

│ │ ├── train_images

│ │ ├── valid_images

│ │ ├── annotations

│ │ │ ├── annotations_train.json

│ │ │ ├── annotations_valid.json

Training

Our model is based on MaskRCNN-resnet50-FPN. The model is trained end-to-end on OVIS based on a MSCOCO pretrained checkpoint (mmlab link or google drive).

Run the command below to train the model.

CUDA_VISIBLE_DEVICES=0,1,2,3 python train.py configs/cmasktrack_rcnn_r50_fpn_1x_ovis.py --work_dir ./workdir/cmasktrack_rcnn_r50_fpn_1x_ovis --gpus 4

For reference to arguments such as learning rate and model parameters, please refer to configs/cmasktrack_rcnn_r50_fpn_1x_ovis.py.

Evaluation

Our pretrained model is available for download at Google Drive (comming soon). Run the following command to evaluate the model on OVIS.

CUDA_VISIBLE_DEVICES=0 python test_video.py configs/cmasktrack_rcnn_r50_fpn_1x_ovis.py [MODEL_PATH] --out [OUTPUT_PATH.pkl] --eval segm

A json file containing the predicted result will be generated as OUTPUT_PATH.pkl.json. OVIS currently only allows evaluation on the codalab server. Please upload the generated result to codalab server to see actual performances.

License

This project is released under the Apache 2.0 license, while the correlation ops is under MIT license.

Acknowledgement

This project is based on mmdetection (commit hash f3a939f), mmcv, MaskTrackRCNN and Pytorch-Correlation-extension. Thanks for their wonderful works.

Citation

If you find our paper and code useful in your research, please consider giving a star

@article{qi2021occluded,

title={Occluded Video Instance Segmentation},

author={Jiyang Qi and Yan Gao and Yao Hu and Xinggang Wang and Xiaoyu Liu and Xiang Bai and Serge Belongie and Alan Yuille and Philip Torr and Song Bai},

journal={arXiv preprint arXiv:2102.01558},

year={2021},

}