Website • Docs • Blog • LinkedIn • Community Slack

ML-powered analytics engine for outlier detection and root cause analysis

✨ What is Chaos Genius?

Chaos Genius is an open-source, ML-powered analytics engine for outlier detection and root cause analysis. It can be used to monitor and analyse high-dimensional business, data and system metrics at scale.

Using Chaos Genius, users can segment large datasets by key performance metrics (e.g. Daily Active Users, Cloud Costs, Failure Rates) and by the important dimensions (e.g. CountryID, DeviceID, ProductID, DayofWeek) across which they want to monitor and analyse those metrics.
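As a rough illustration of what segmenting a metric by a dimension means, the following Python sketch sums a hypothetical `dau` (Daily Active Users) column for each value of a `country` or `device` dimension. The rows, column names and the `segment` helper are made up for this example and are not part of Chaos Genius's API.

```python
from collections import defaultdict

# Hypothetical rows: each has one metric value plus a few dimension columns.
rows = [
    {"country": "IN", "device": "mobile", "dau": 120},
    {"country": "IN", "device": "desktop", "dau": 40},
    {"country": "US", "device": "mobile", "dau": 90},
    {"country": "US", "device": "desktop", "dau": 60},
]


def segment(rows, metric, dimension):
    """Sum `metric` for each distinct value of `dimension` (illustrative helper)."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[dimension]] += row[metric]
    return dict(totals)


by_country = segment(rows, "dau", "country")
by_device = segment(rows, "dau", "device")
print(by_country)  # {'IN': 160.0, 'US': 150.0}
print(by_device)   # {'mobile': 210.0, 'desktop': 100.0}
```

With many high-cardinality dimensions, the number of such segments (and of their combinations) grows quickly, which is the problem the features below address.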

Use Chaos Genius if you want:

- Multidimensional Drill Downs & Insights

- Anomaly Detection

- Smart Alerting

- Seasonality Detection*

- Automated Root Cause Analysis*

- Forecasting*

- What-If Analysis*

*On our short- and medium-term roadmap

Demo

To try it out, check out our Demo, or explore the live dashboards for:

- E-commerce

- Music

- Ride-Hailing

- Cloud Monitoring

- Data Quality (coming soon)

⚙️ Quick Start

git clone https://github.com/chaos-genius/chaos_genius

cd chaos_genius

docker-compose up

Visit http://localhost:8080

Follow this Quick Start guide or read our Documentation for more details.

💫 Key Features

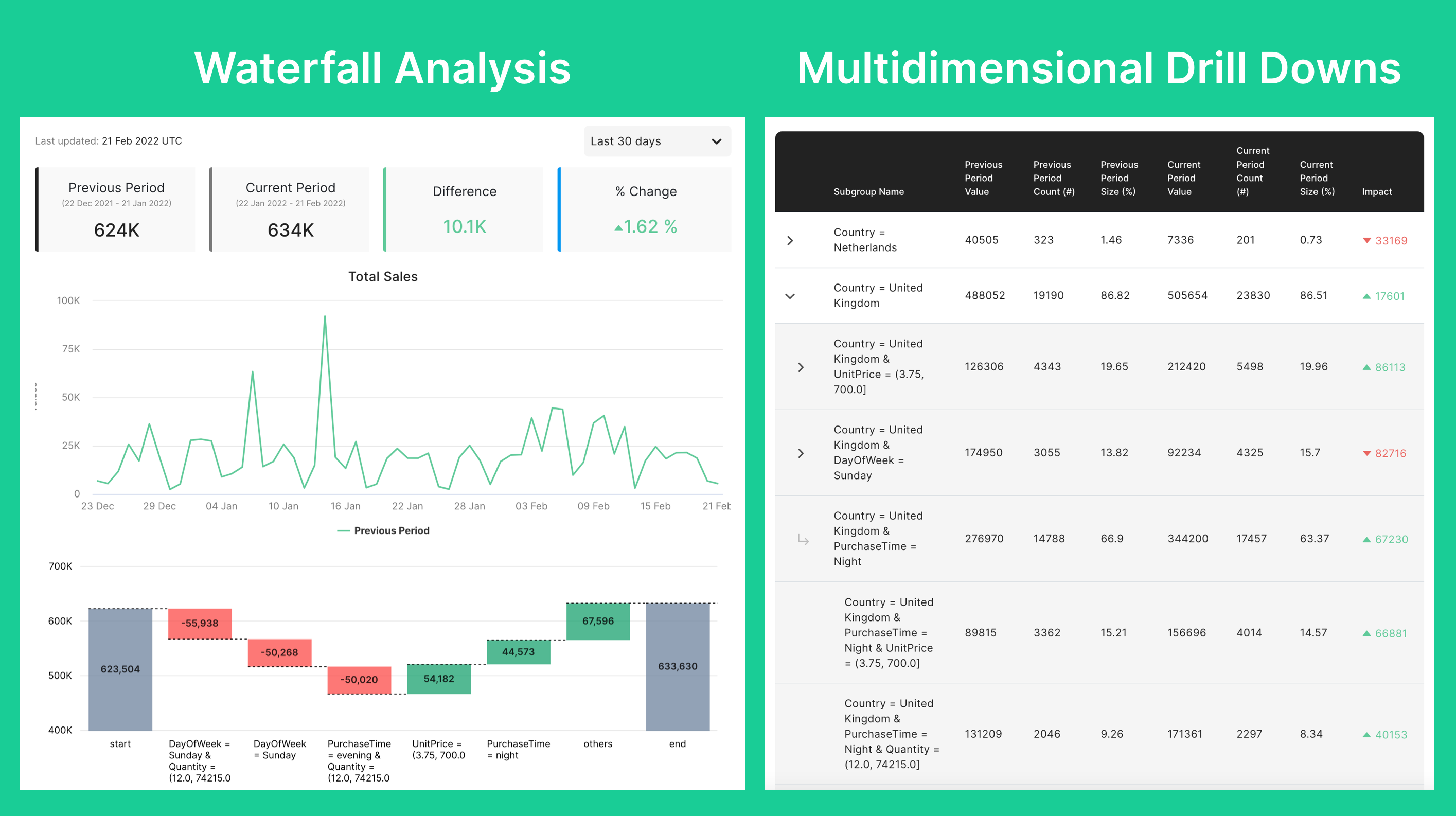

1. Automated DeepDrills

Generate multidimensional drilldowns to identify the key drivers of change in defined metrics (e.g. Sales) across a large number of high-cardinality dimensions (e.g. CountryID, ProductID, BrandID, Device_type).

- Techniques: Statistical Filtering, A*-like path-based search to deal with the combinatorial explosion
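To make "key drivers of change" concrete, here is a brute-force Python sketch under simplifying assumptions: it compares a baseline and a current period, sums a metric for every subgroup of up to `max_depth` dimensions, and keeps subgroups whose change is at least `min_share` of the total change. The helper names, the threshold rule and the sample data are hypothetical; this is not Chaos Genius's statistical filtering or its A*-like search, which exists precisely to avoid enumerating every combination as this sketch does.

```python
from collections import defaultdict
from itertools import combinations


def aggregate(rows, metric, dims):
    """Sum `metric` for every combination of values of the columns in `dims`."""
    totals = defaultdict(float)
    for row in rows:
        key = tuple((d, row[d]) for d in dims)
        totals[key] += row[metric]
    return totals


def drivers_of_change(baseline, current, metric, dimensions, max_depth=2, min_share=0.1):
    """Return (segment, delta) pairs ranked by absolute contribution to the change.

    Segments whose absolute change is below `min_share` of the total absolute
    change are dropped; if the total does not change, nothing is returned.
    """
    total_change = sum(r[metric] for r in current) - sum(r[metric] for r in baseline)
    if total_change == 0:
        return []
    results = []
    for depth in range(1, max_depth + 1):
        for dims in combinations(dimensions, depth):
            before = aggregate(baseline, metric, dims)
            after = aggregate(current, metric, dims)
            for key in set(before) | set(after):
                delta = after.get(key, 0.0) - before.get(key, 0.0)
                if abs(delta) >= min_share * abs(total_change):
                    results.append((key, delta))
    # Largest absolute contributors first.
    return sorted(results, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical Sales data for two periods: only (IN, mobile) dropped.
baseline = [
    {"country": "IN", "device": "mobile", "sales": 100},
    {"country": "IN", "device": "desktop", "sales": 50},
    {"country": "US", "device": "mobile", "sales": 80},
    {"country": "US", "device": "desktop", "sales": 70},
]
current = [dict(row) for row in baseline]
current[0]["sales"] = 40

for segment, delta in drivers_of_change(baseline, current, "sales", ["country", "device"]):
    print(segment, delta)
```

Here the country IN, the device mobile and the pair (IN, mobile) each account for the full drop, so all three are reported; the number of combinations this loop visits is what grows explosively as dimensions are added.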

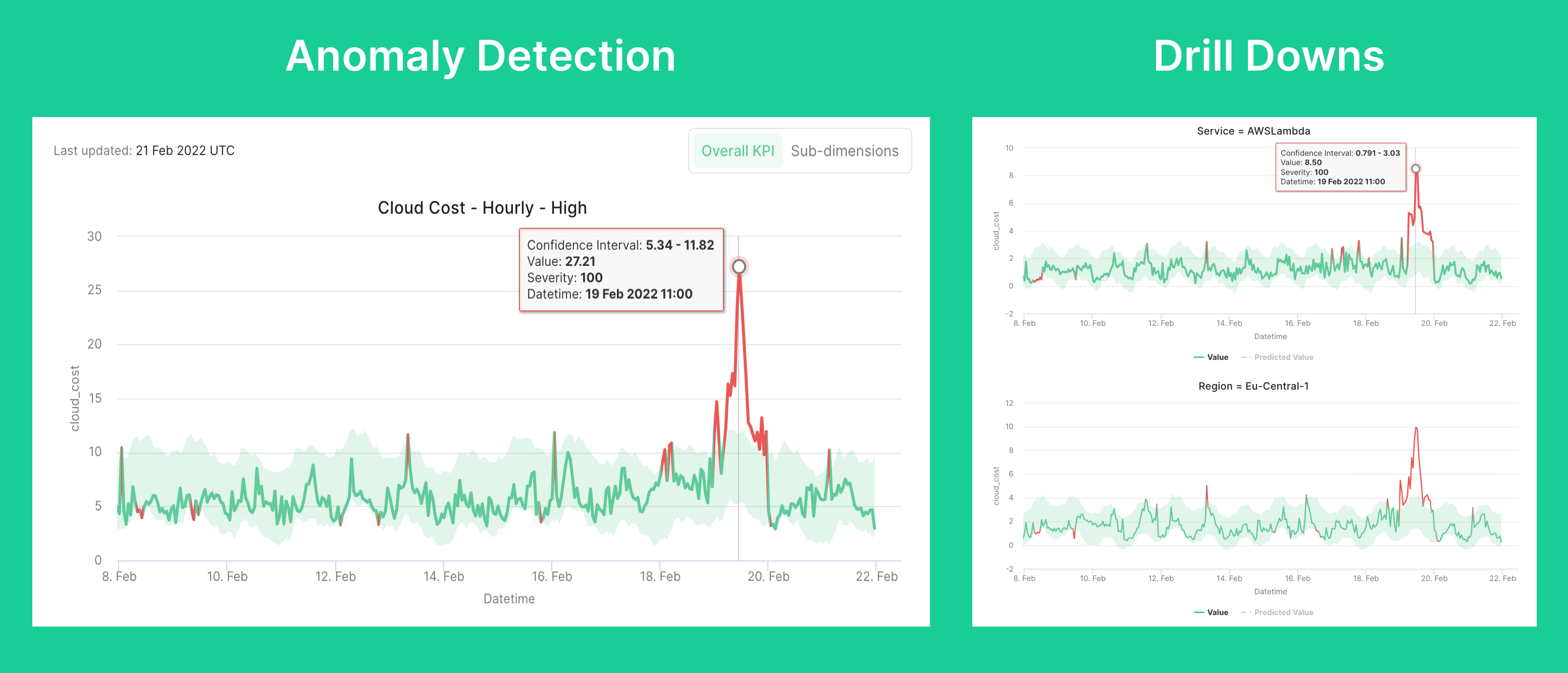

2. Anomaly Detection

Modular anomaly detection toolkit for monitoring high-dimensional time series, with the ability to choose between different models. It handles variations caused by seasonality, trends and holidays in the time series data.

- Models: Prophet, EWMA, EWSTD, Neural Prophet, Greykite
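The following Python sketch shows the general idea behind an EWMA-style detector paired with an exponentially weighted standard deviation: a point is flagged when it lies more than `k` standard deviations from the running mean estimated from earlier points. The function name and the `alpha`, `k` and `warmup` defaults are illustrative assumptions, not Chaos Genius's actual model configuration.

```python
def ewma_anomalies(values, alpha=0.3, k=3.0, warmup=5):
    """Return indices of points more than k EW standard deviations from the EW mean.

    The mean and variance used to judge point i are estimated from points
    0..i-1 only, using exponential weighting with smoothing factor alpha.
    """
    if not values:
        return []
    mean, var = float(values[0]), 0.0
    anomalies = []
    for i, x in enumerate(values[1:], start=1):
        std = var ** 0.5
        # Skip the first few points while the estimates are still unstable.
        if i >= warmup and abs(x - mean) > k * std:
            anomalies.append(i)
        # Incremental exponentially weighted mean and variance update.
        diff = x - mean
        incr = alpha * diff
        mean += incr
        var = (1 - alpha) * (var + diff * incr)
    return anomalies


series = [10, 11, 10, 12, 11, 10, 11, 12, 10, 11, 50, 11, 10]
print(ewma_anomalies(series))  # [10]
```

In this sketch flagged points still update the running estimates, which widens the band right after a spike; a production detector would likely handle that, along with seasonality and trend, more carefully.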

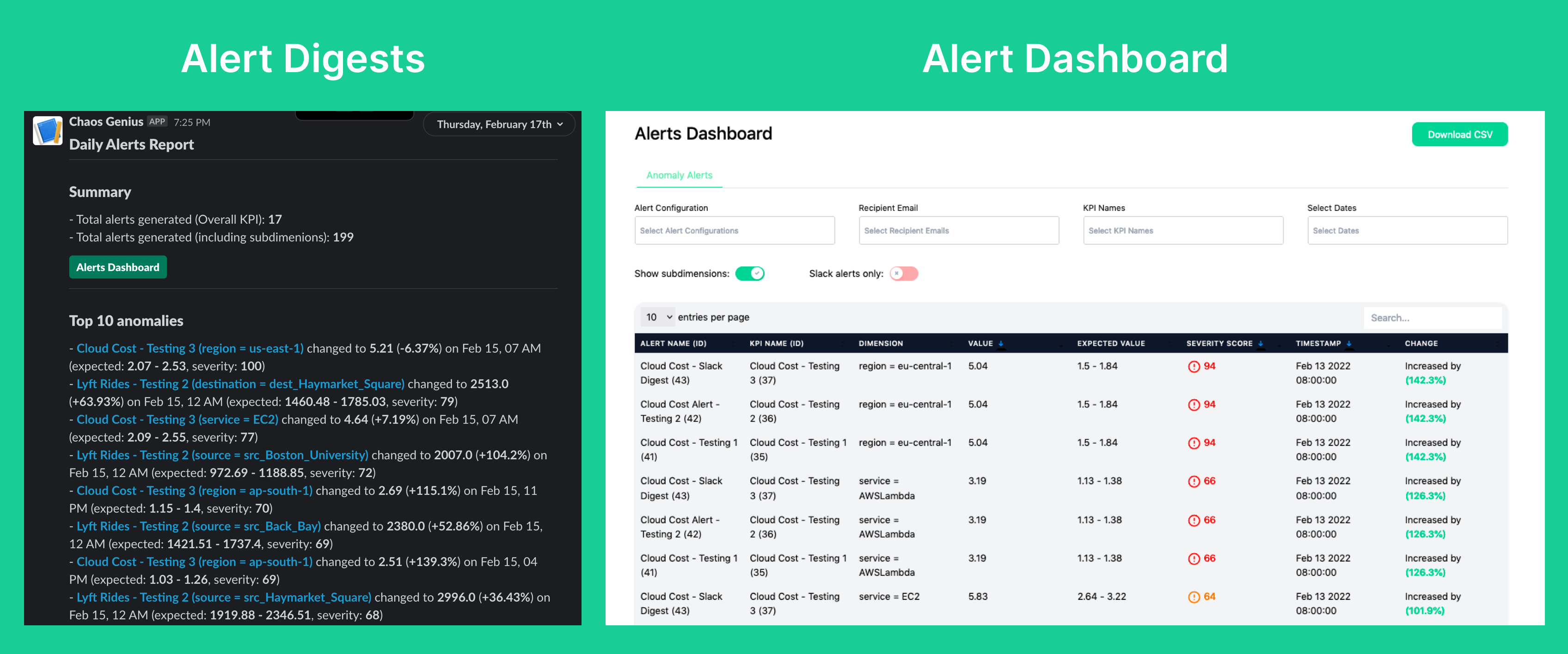

3. Smart Alerts

Actionable alerts with self-learning thresholds, plus configuration options for alert frequency and reporting to combat alert fatigue.

- Channels: Email, Slack
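One simple way to cap alert frequency is a per-metric cooldown. The sketch below is a hypothetical `AlertThrottler` class (not part of Chaos Genius) that suppresses repeat alerts for the same metric within a configurable window; it illustrates the frequency idea only and does not implement self-learning thresholds.

```python
from datetime import datetime, timedelta


class AlertThrottler:
    """Hypothetical helper: allow at most one alert per metric per cooldown window."""

    def __init__(self, cooldown: timedelta):
        self.cooldown = cooldown
        self._last_sent = {}  # metric name -> datetime of the last alert sent

    def should_send(self, metric: str, now: datetime) -> bool:
        last = self._last_sent.get(metric)
        if last is not None and now - last < self.cooldown:
            return False  # Still inside the cooldown window: suppress the alert.
        self._last_sent[metric] = now
        return True


throttler = AlertThrottler(cooldown=timedelta(hours=1))
start = datetime(2024, 1, 1, 9, 0)
print(throttler.should_send("daily_active_users", start))                          # True
print(throttler.should_send("daily_active_users", start + timedelta(minutes=20)))  # False
print(throttler.should_send("daily_active_users", start + timedelta(hours=1)))     # True
print(throttler.should_send("cloud_costs", start + timedelta(minutes=5)))          # True
```

Keying the cooldown by metric means a noisy metric cannot silence alerts for unrelated ones.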

Community

For help, discussions and suggestions, feel free to reach out to the Chaos Genius team and the community here:

- GitHub (report bugs, contribute, follow the roadmap)

- Slack (discuss with the community and the Chaos Genius team)

- Book Office Hours (set up time with the Chaos Genius team for questions or help with setup)

- Blog (follow the latest trends in data, machine learning, open source and more)

🚦 Roadmap

Our goal is to make Chaos Genius production-ready for all organisations, irrespective of their data infrastructure, data sources and scale requirements. With that in mind, we have created a roadmap for Chaos Genius. If you see something missing or wish to make suggestions, please drop us a line on our Community Slack or raise an issue.

🌱 Contributing

Want to contribute? Get started with:

- Show us some love: give us a 🌟!

- Submit an issue.

- Share a part of the documentation that you find difficult to follow.

- Create a pull request. Here's a list of issues to start with. Please review our contribution guidelines before opening a pull request. Thank you for contributing!

❤️ Contributors

Thanks go to these wonderful people (emoji key):

This project follows the all-contributors specification. Contributions of any kind welcome!

📜 License

Chaos Genius is licensed under the MIT license.