PART 2: CHAIN LINKING AUDIO-TO-TEXT NLP TASKS

2A: TRANSCRIBE-TRANSLATE-SENTIMENT-ANALYSIS

In notebook3.0, I demo a simple workflow to:

- transcribe a longish English speech (~24 minutes)

- translate it into Chinese

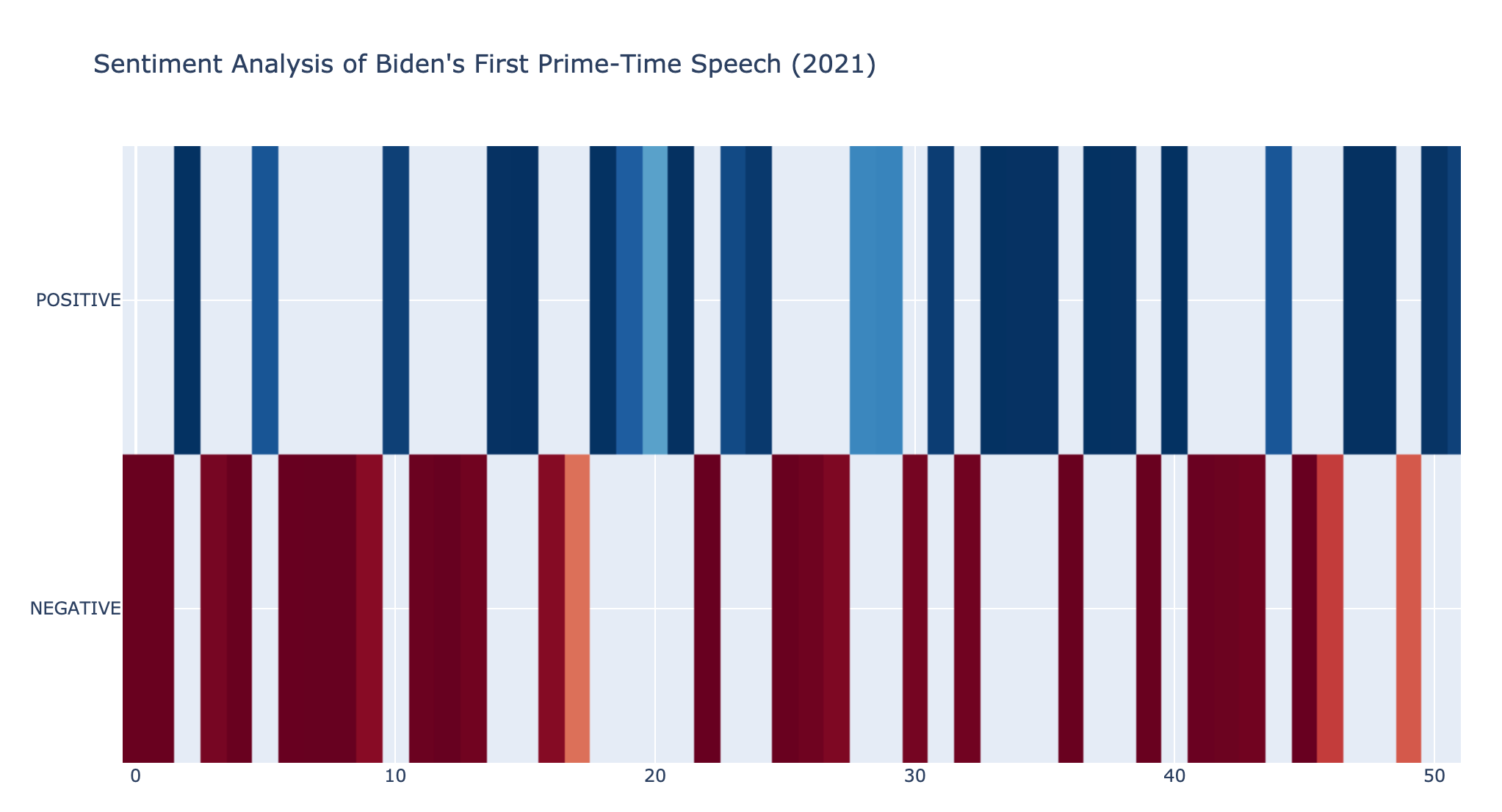

- plot the 'sentiment structure' of the Engish speech.

I used Biden's first prime time speech on Mar 11/12 2021 (depending on which time zone you are in). The audio clip was split in 71 20-second clips.

Results are a bit rough, but it's interesting that you can do this in 1 go (and in 1 notebook) these days. Future possibilities are interesting to say the least.

Note:

Code was updated on Mar 18 2021 for a cleaner approach.

2B: TRANSCRIBE-SUMMARISE

In notebook3.1, I demo a simple workflow to:

- transcribe a short English speech (4 minutes)

- summarize it via FB/Bart or Google/Pegasus

Summarisation is one of the toughest NLP tasks to get right, so I used a shorter audio file - a 4-minute clip by Singapore Prime Minister Lee Hsien Loong talking about populism.

MEDIUM

A short write up on the results in this Medium post.

PART 1: TRANSCRIBING POETRY AND SPEECHES WITH WAV2VEC2

This series of notebooks is aimed at helping fellow NLP enthusiasts experiment with the Wav2Vec2 model by FB and implemented in transformers by Hugging Face.

I was curious to see how well the model would perform for short and long audio clips, different accents and different "delivery formats" - be it formal speeches or a poetry recital. The accents in these audio clips involve speakers who are: White American, African American and Singaporean Chinese.

-

Notebook 1.0: This is the simplest trial of the Wav2Vec2 model, involving a 62s clip of John F Kennedy's famous inaugural speech in 1961.

-

2.0: Longer audio clips tend to crash notebooks using the Wav2Vec2 model, so I used a work around to transcribe Amanda Gorman's evocative inauguration poem (5 minutes 34 seconds)

-

2.1: Colab notebook to transcribe a 12.5 minutes speech by the Singapore Prime Minister, to see how the model deals with an Asian accent.

-

2.2: Notebook with revised and cleaner code for dealing with longer audio files.

The necessary audio files are included in this repo. If you want to use your own clips, make sure to downsample them to 16kHz.

MEDIUM

A short write up on the results in this Medium post.