Hello, world!

👋

We've rebuilt data engineering for the data science era.

Prefect is a new workflow management system, designed for modern infrastructure and powered by the open-source Prefect Core workflow engine. Users organize Tasks into Flows, and Prefect takes care of the rest.

Read the docs; get the code; ask us anything!

Welcome to Workflows

Prefect's Pythonic API should feel familiar for newcomers. Mark functions as tasks and call them on each other to build up a flow.

from prefect import task, Flow, Parameter

@task(log_stdout=True)

def say_hello(name):

print("Hello, {}!".format(name))

with Flow("My First Flow") as flow:

name = Parameter('name')

say_hello(name)

flow.run(name='world') # "Hello, world!"

flow.run(name='Marvin') # "Hello, Marvin!"

For more detail, please see the Core docs

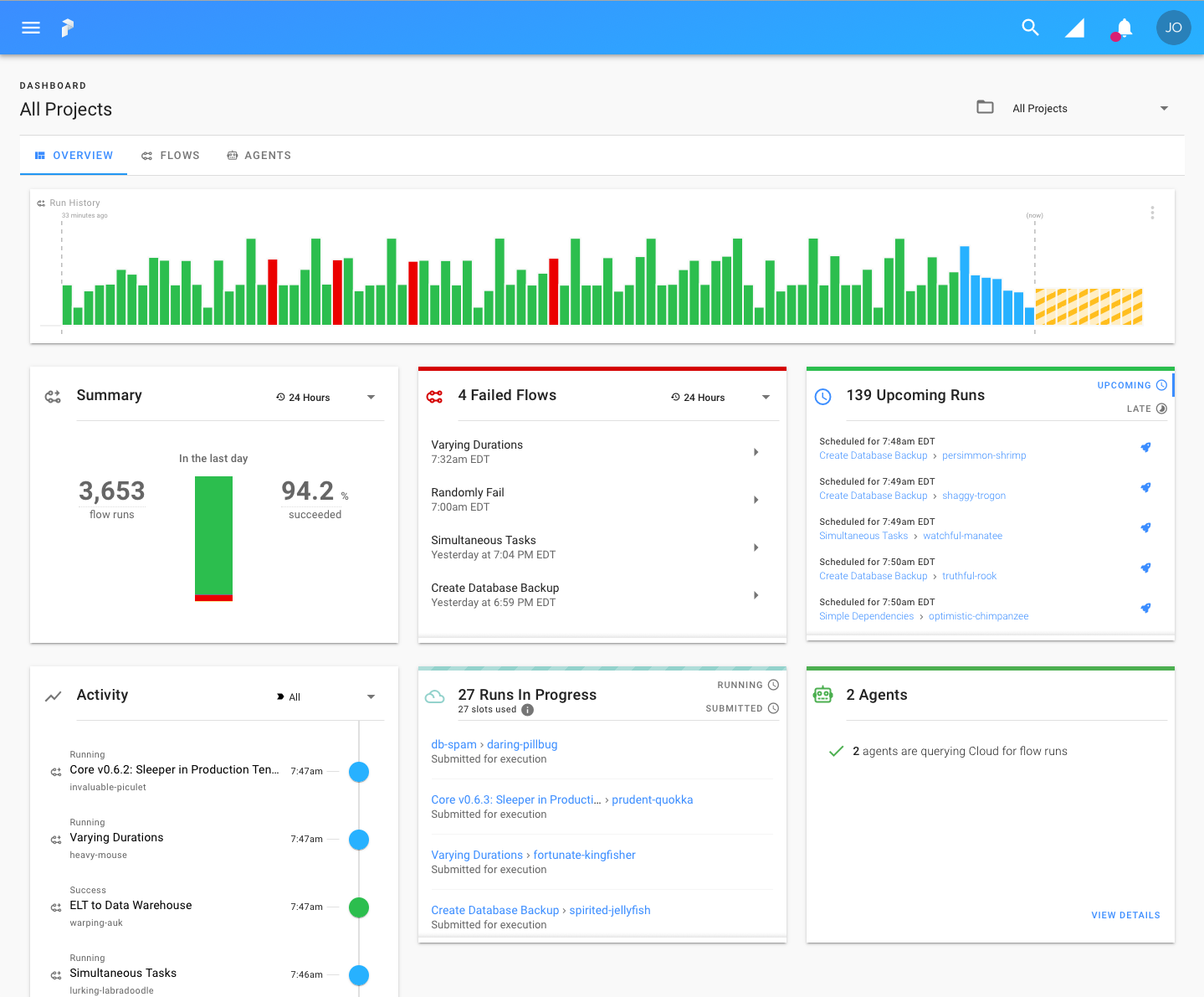

UI and Server

In addition to the Prefect Cloud platform, Prefect includes an open-source backend for orchestrating and managing flows, consisting primarily of Prefect Server and Prefect UI. This local server stores flow metadata in a Postgres database and exposes a GraphQL API.

Before running the server for the first time, run prefect backend server to configure Prefect for local orchestration. Please note the server requires Docker and Docker Compose to be running.

To start the server, UI, and all required infrastructure, run:

prefect server start

Once all components are running, you can view the UI by visiting http://localhost:8080.

Please note that executing flows from the server requires at least one Prefect Agent to be running: prefect agent local start.

Finally, to register any flow with the server, call flow.register(). For more detail, please see the orchestration docs.

"...Prefect?"

From the Latin praefectus, meaning "one who is in charge", a prefect is an official who oversees a domain and makes sure that the rules are followed. Similarly, Prefect is responsible for making sure that workflows execute properly.

It also happens to be the name of a roving researcher for that wholly remarkable book, The Hitchhiker's Guide to the Galaxy.

Integrations

Thanks to Prefect's growing task library and deep ecosystem integrations, building data applications is easier than ever.

Something missing? Open a feature request or contribute a PR! Prefect was designed to make adding new functionality extremely easy, whether you build on top of the open-source package or maintain an internal task library for your team.

Task Library

Deployment & Execution

Azure |

AWS |

Dask |

Docker |

Google Cloud |

Kubernetes |

Universal Deploy |

Resources

Prefect provides a variety of resources to help guide you to a successful outcome.

We are committed to ensuring a positive environment, and all interactions are governed by our Code of Conduct.

Documentation

Prefect's documentation -- including concepts, tutorials, and a full API reference -- is always available at docs.prefect.io.

Instructions for contributing to documentation can be found in the development guide.

Slack Community

Join our Slack to chat about Prefect, ask questions, and share tips.

Blog

Visit the Prefect Blog for updates and insights from the Prefect team.

Support

Prefect offers a variety of community and premium support options for users of both Prefect Core and Prefect Cloud.

Contributing

Read about Prefect's community or dive in to the development guides for information about contributions, documentation, code style, and testing.

Installation

Requirements

Prefect requires Python 3.6+. If you're new to Python, we recommend installing the Anaconda distribution.

Latest Release

To install Prefect, run:

pip install prefect

or, if you prefer to use conda:

conda install -c conda-forge prefect

or pipenv:

pipenv install --pre prefect

Bleeding Edge

For development or just to try out the latest features, you may want to install Prefect directly from source.

Please note that the master branch of Prefect is not guaranteed to be compatible with Prefect Cloud or the local server.

git clone https://github.com/PrefectHQ/prefect.git

pip install ./prefect

License

Prefect Core is licensed under the Apache Software License Version 2.0. Please note that Prefect Core includes utilities for running Prefect Server and the Prefect UI, which are themselves licensed under the Prefect Community License.