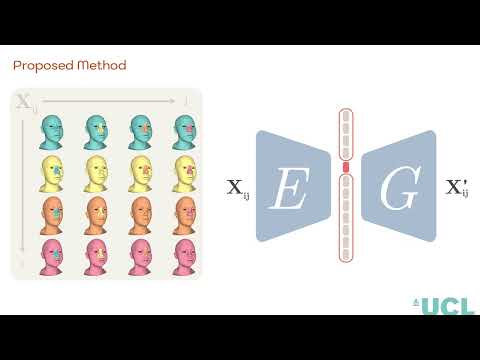

3D Shape Variational Autoencoder Latent Disentanglement via Mini-Batch Feature Swapping for Bodies and Faces

After cloning the repo, open a terminal and go to the project directory.

Make `install_env.sh` executable:

`chmod +x ./install_env.sh`

Then run it:

`./install_env.sh`

This creates a virtual environment with all the necessary libraries.

The code was tested with Python 3.8, CUDA 10.1, and PyTorch 1.7.1, but it should also work with newer versions of Python, CUDA, and PyTorch. To try more recent versions of these libraries, change the `CUDA`, `TORCH`, and `PYTHON_V` variables in `install_env.sh`.
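After activating the environment, you can use a short Python check to confirm that it matches the tested setup. This is a sketch, not part of the repository, and the helper names are hypothetical. It reports whether a virtual environment is active and compares the installed Python and PyTorch versions against the ones listed above. It does not check CUDA, because that would require importing `torch` and reading `torch.version.cuda`.

```python
import sys
from importlib import metadata

# Versions reported as tested; newer versions are expected to work as well.
TESTED = {"python": "3.8", "torch": "1.7.1"}


def parse_version(text):
    """Turn e.g. '1.7.1+cu101' into (1, 7, 1); non-numeric suffixes are dropped."""
    parts = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def in_virtualenv():
    """True when running inside a venv/virtualenv rather than the system Python."""
    return hasattr(sys, "real_prefix") or sys.prefix != sys.base_prefix


def installed_torch_version():
    """Return the installed torch version string, or None if torch is missing."""
    try:
        return metadata.version("torch")
    except metadata.PackageNotFoundError:
        return None


def check_environment():
    """Map each check to its result; version entries are (found, at_least_tested)."""
    report = {"virtualenv": in_virtualenv()}
    py = ".".join(str(n) for n in sys.version_info[:3])
    report["python"] = (py, parse_version(py) >= parse_version(TESTED["python"]))
    torch_v = installed_torch_version()
    torch_ok = torch_v is not None and parse_version(torch_v) >= parse_version(TESTED["torch"])
    report["torch"] = (torch_v, torch_ok)
    return report


if __name__ == "__main__":
    for name, value in check_environment().items():
        print(f"{name}: {value}")
```

Versions are compared as integer tuples because plain string comparison would rank `1.10` below `1.7`.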

Then activate the virtual environment:

`source ./id-generator-env/bin/activate`

To obtain access to the UHM models and generate the dataset, please follow the instructions in the UHM GitHub repository.

The data is generated automatically from the UHM during the first training run. For this, training must be launched with the `--generate_data` argument (see below).

We provide a configuration file for each experiment (`default.yaml` is the configuration file of the proposed method). Make sure the paths in the config file are correct. In particular, you may need to change `pca_path` to point to the location where the UHM was downloaded.
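Before training, a short script can flag config entries that point to files or folders that do not exist. The sketch below is hypothetical and not part of the repository. It assumes only that path-like settings such as `pca_path` have keys ending in `_path`. It walks nested dictionaries but ignores lists, and it works on an already-loaded config dictionary, for example one read with PyYAML's `yaml.safe_load`.

```python
import os
import tempfile


def find_path_entries(config, prefix=""):
    """Yield (dotted_key, value) for every string entry whose key ends in '_path'."""
    for key, value in config.items():
        dotted = f"{prefix}{key}"
        if isinstance(value, dict):
            # Recurse into nested sections, keeping a dotted key for reporting.
            yield from find_path_entries(value, prefix=dotted + ".")
        elif str(key).endswith("_path") and isinstance(value, str):
            yield dotted, value


def missing_paths(config):
    """Return the (dotted_key, path) entries that do not exist on disk."""
    return [(k, v) for k, v in find_path_entries(config) if not os.path.exists(v)]


if __name__ == "__main__":
    # Demo on an in-memory config; apart from pca_path, the keys are made up.
    with tempfile.TemporaryDirectory() as tmp:
        demo = {"pca_path": tmp, "section": {"other_path": os.path.join(tmp, "missing")}}
        for key, path in missing_paths(demo):
            print(f"{key} -> {path} does not exist")
```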

To start training, run the following from the project directory:

`python train.py --config=configurations/<A_CONFIG_FILE>.yaml --id=<NAME_OF_YOUR_EXPERIMENT>`

If this is your first training run and you need to generate the data, run:

`python train.py --generate_data --config=configurations/<A_CONFIG_FILE>.yaml --id=<NAME_OF_YOUR_EXPERIMENT>`

Basic tests run automatically at the end of training. To run the additional tests presented in the paper, uncomment any of the function calls at the end of `test.py`. If your model has already been trained, or you are using our pretrained model, you can run the tests without training:

`python test.py --id=<NAME_OF_YOUR_EXPERIMENT>`

Note that `NAME_OF_YOUR_EXPERIMENT` is also the name of the folder containing the pretrained model.

We also provide the files storing:

- the precomputed down- and up-sampling transformations
- the precomputed spirals
- the mesh template with the face regions
- the network weights

@inproceedings{foti20223d,
  title={3D Shape Variational Autoencoder Latent Disentanglement via Mini-Batch Feature Swapping for Bodies and Faces},
  author={Foti, Simone and Koo, Bongjin and Stoyanov, Danail and Clarkson, Matthew J},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={18730--18739},
  year={2022}
}