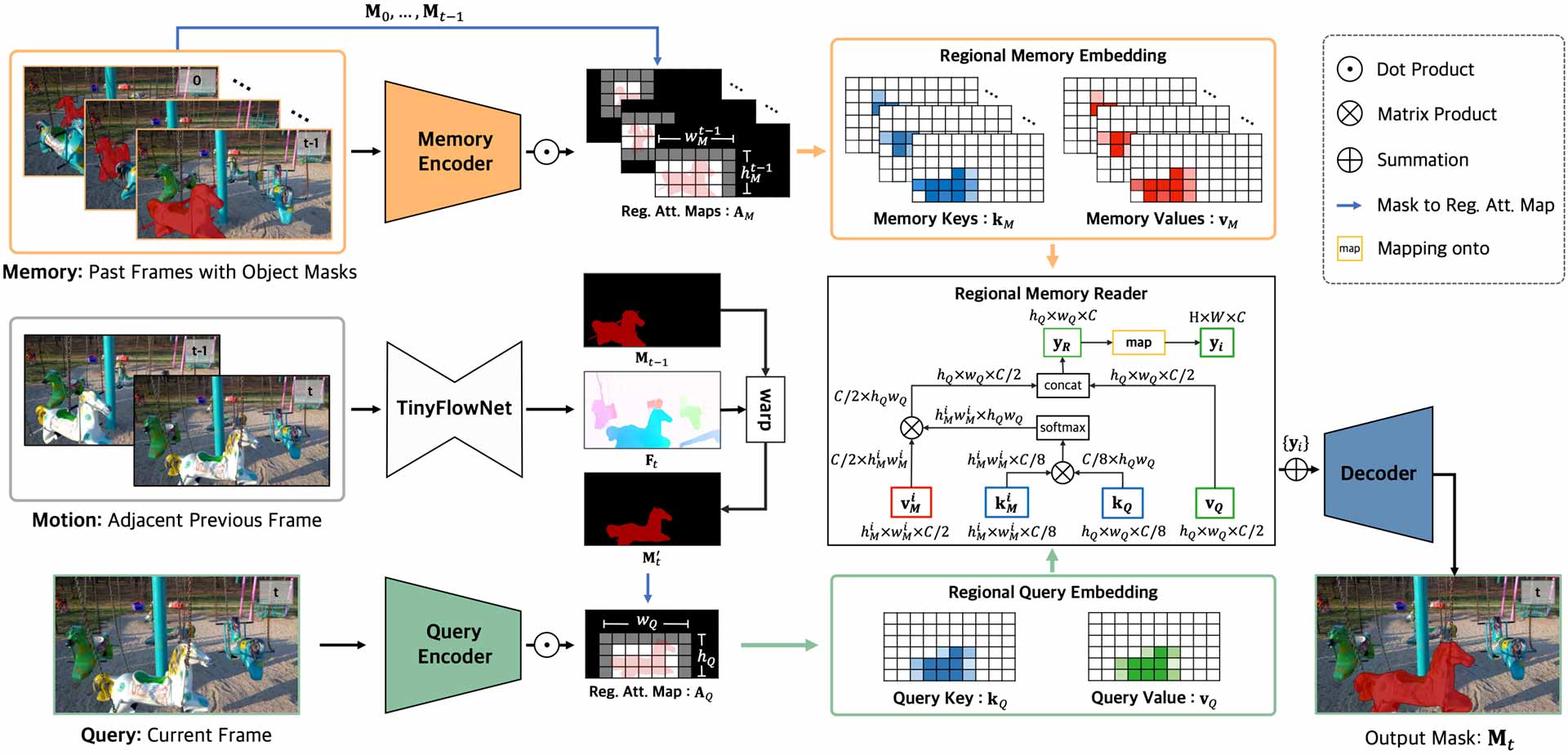

This repository contains the source code for the paper *Efficient Regional Memory Network for Video Object Segmentation* (CVPR 2021). If you find this work useful, please cite:

```bibtex
@inproceedings{xie2021efficient,
  title     = {Efficient Regional Memory Network for Video Object Segmentation},
  author    = {Xie, Haozhe and Yao, Hongxun and Zhou, Shangchen and Zhang, Shengping and Sun, Wenxiu},
  booktitle = {CVPR},
  year      = {2021}
}
```

We use the ECSSD, COCO, PASCAL VOC, MSRA10K, DAVIS, and YouTube-VOS datasets in our experiments. They are available from the following sources:

- ECSSD Images / Masks
- COCO Images / Masks
- PASCAL VOC
- MSRA10K
- DAVIS 2017 Train/Val
- DAVIS 2017 Test-dev
- YouTube-VOS

Pretrained models for DAVIS and YouTube-VOS are available here:

- RMNet for DAVIS (202 MB)
- RMNet for YouTube-VOS (202 MB)

To get started, clone the repository and install the Python dependencies:

```bash
git clone https://github.com/hzxie/RMNet.git
cd RMNet
pip install -r requirements.txt
```

NOTE: PyTorch >= 1.4, CUDA >= 9.0 and GCC >= 4.9 are required.
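Before building the extensions, a quick way to confirm these minimum versions is a small Python check like the one below. It is a sketch and not part of the repository: `parse_version`, `meets_minimum` and `check_environment` are hypothetical helpers, and the GCC check assumes `gcc` is on `PATH` and supports `-dumpversion`.

```python
import re
import shutil
import subprocess


def parse_version(version):
    """Keep the leading dotted number, e.g. '1.8.1+cu111' -> (1, 8, 1)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"cannot parse version string: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(version, minimum):
    """True if `version` >= `minimum`; tuples compare component by component."""
    return parse_version(version) >= parse_version(minimum)


def check_environment():
    """Print whether PyTorch, CUDA and GCC meet the minimums stated in the NOTE."""
    import torch  # imported lazily so the helpers above work without PyTorch

    print("PyTorch", torch.__version__, meets_minimum(torch.__version__, "1.4"))
    cuda = torch.version.cuda  # None for CPU-only PyTorch builds
    print("CUDA", cuda, cuda is not None and meets_minimum(cuda, "9.0"))

    gcc = shutil.which("gcc")
    if gcc is None:
        print("GCC not found on PATH")
    else:
        out = subprocess.run([gcc, "-dumpversion"], capture_output=True, text=True)
        version = out.stdout.strip()
        print("GCC", version, meets_minimum(version, "4.9"))
```

Call `check_environment()` from a Python shell inside the environment you plan to train in; each line ends with `True` when the requirement is met.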

From the repository root, build the two custom PyTorch extensions:

```bash
RMNET_HOME=$(pwd)
cd "$RMNET_HOME/extensions/reg_att_map_generator"
python setup.py install --user
cd "$RMNET_HOME/extensions/flow_affine_transformation"
python setup.py install --user
```

The optical flows are precomputed and stored as `.flo` files:

- For DAVIS, the optical flows are computed by FlowNet2-CSS with the model pretrained on FlyingThings3D.
- For YouTube-VOS, the optical flows are computed by RAFT with the model pretrained on Sintel.
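The configuration expects these flows as `.flo` files (see `OPTICAL_FLOW_FILE_PATH`). Assuming they follow the widely used Middlebury `.flo` layout (a float32 tag `202021.25`, then int32 width and height, then row-major interleaved float32 `(u, v)` pairs, all little-endian), a dependency-free reader and writer could look like this. The function names are hypothetical, and a flat list stands in for an `H x W x 2` array so the sketch does not require NumPy.

```python
import struct

FLO_TAG = 202021.25  # the bytes b"PIEH" read as a little-endian float32


def encode_flo(width, height, flow):
    """Serialize a flat [u0, v0, u1, v1, ...] list (row-major) to .flo bytes."""
    if len(flow) != width * height * 2:
        raise ValueError("flow must hold width * height * 2 values")
    header = struct.pack("<fii", FLO_TAG, width, height)
    return header + struct.pack(f"<{len(flow)}f", *flow)


def decode_flo(data):
    """Parse .flo bytes into (width, height, flat list of u/v values)."""
    if len(data) < 12:
        raise ValueError("too short to contain a .flo header")
    tag, width, height = struct.unpack_from("<fii", data, 0)
    if tag != FLO_TAG:
        raise ValueError(f"bad .flo tag: {tag}")
    n = width * height * 2
    if len(data) != 12 + 4 * n:
        raise ValueError("payload size does not match the header")
    return width, height, list(struct.unpack_from(f"<{n}f", data, 12))


def read_flo(path):
    """Read a .flo file from disk."""
    with open(path, "rb") as f:
        return decode_flo(f.read())


def write_flo(path, width, height, flow):
    """Write a .flo file to disk."""
    with open(path, "wb") as f:
        f.write(encode_flo(width, height, flow))
```

With this layout, the flow vector at pixel `(x, y)` is `flow[2 * (y * width + x)]` (horizontal component) followed by the vertical component at the next index.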

Next, update the dataset file paths in the configuration:

```python
__C.DATASETS = edict()

__C.DATASETS.DAVIS = edict()
__C.DATASETS.DAVIS.INDEXING_FILE_PATH = './datasets/DAVIS.json'
__C.DATASETS.DAVIS.IMG_FILE_PATH = '/path/to/Datasets/DAVIS/JPEGImages/480p/%s/%05d.jpg'
__C.DATASETS.DAVIS.ANNOTATION_FILE_PATH = '/path/to/Datasets/DAVIS/Annotations/480p/%s/%05d.png'
__C.DATASETS.DAVIS.OPTICAL_FLOW_FILE_PATH = '/path/to/Datasets/DAVIS/OpticalFlows/480p/%s/%05d.flo'

__C.DATASETS.YOUTUBE_VOS = edict()
__C.DATASETS.YOUTUBE_VOS.INDEXING_FILE_PATH = '/path/to/Datasets/YouTubeVOS/%s/meta.json'
__C.DATASETS.YOUTUBE_VOS.IMG_FILE_PATH = '/path/to/Datasets/YouTubeVOS/%s/JPEGImages/%s/%s.jpg'
__C.DATASETS.YOUTUBE_VOS.ANNOTATION_FILE_PATH = '/path/to/Datasets/YouTubeVOS/%s/Annotations/%s/%s.png'
__C.DATASETS.YOUTUBE_VOS.OPTICAL_FLOW_FILE_PATH = '/path/to/Datasets/YouTubeVOS/%s/OpticalFlows/%s/%s.flo'

__C.DATASETS.PASCAL_VOC = edict()
__C.DATASETS.PASCAL_VOC.INDEXING_FILE_PATH = '/path/to/Datasets/voc2012/trainval.txt'
__C.DATASETS.PASCAL_VOC.IMG_FILE_PATH = '/path/to/Datasets/voc2012/images/%s.jpg'
__C.DATASETS.PASCAL_VOC.ANNOTATION_FILE_PATH = '/path/to/Datasets/voc2012/masks/%s.png'

__C.DATASETS.ECSSD = edict()
__C.DATASETS.ECSSD.N_IMAGES = 1000
__C.DATASETS.ECSSD.IMG_FILE_PATH = '/path/to/Datasets/ecssd/images/%s.jpg'
__C.DATASETS.ECSSD.ANNOTATION_FILE_PATH = '/path/to/Datasets/ecssd/masks/%s.png'

__C.DATASETS.MSRA10K = edict()
__C.DATASETS.MSRA10K.INDEXING_FILE_PATH = './datasets/msra10k.txt'
__C.DATASETS.MSRA10K.IMG_FILE_PATH = '/path/to/Datasets/msra10k/images/%s.jpg'
__C.DATASETS.MSRA10K.ANNOTATION_FILE_PATH = '/path/to/Datasets/msra10k/masks/%s.png'

__C.DATASETS.MSCOCO = edict()
__C.DATASETS.MSCOCO.INDEXING_FILE_PATH = './datasets/mscoco.txt'
__C.DATASETS.MSCOCO.IMG_FILE_PATH = '/path/to/Datasets/coco2017/images/train2017/%s.jpg'
__C.DATASETS.MSCOCO.ANNOTATION_FILE_PATH = '/path/to/Datasets/coco2017/masks/train2017/%s.png'

__C.DATASETS.ADE20K = edict()
__C.DATASETS.ADE20K.INDEXING_FILE_PATH = './datasets/ade20k.txt'
__C.DATASETS.ADE20K.IMG_FILE_PATH = '/path/to/Datasets/ADE20K_2016_07_26/images/training/%s.jpg'
__C.DATASETS.ADE20K.ANNOTATION_FILE_PATH = '/path/to/Datasets/ADE20K_2016_07_26/images/training/%s_seg.png'
```
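The `%s` and `%05d` fields in these paths are Python %-format placeholders that are filled in when files are loaded. Judging from the directory layout, DAVIS paths take a sequence name and a frame index, while YouTube-VOS paths take a split, a video ID and a frame name; that argument order is an assumption, as are the helper names below. A quick sanity check that the paths resolve before starting a long run might look like this:

```python
import os

# Templates copied from the configuration; edit them to match your setup.
DAVIS_IMG = '/path/to/Datasets/DAVIS/JPEGImages/480p/%s/%05d.jpg'
YOUTUBE_VOS_IMG = '/path/to/Datasets/YouTubeVOS/%s/JPEGImages/%s/%s.jpg'


def davis_path(template, sequence, frame_idx):
    """Assumed argument order: (sequence name, zero-based frame index)."""
    return template % (sequence, frame_idx)


def youtube_vos_path(template, split, video_id, frame_name):
    """Assumed argument order: (split, video ID, frame name without extension)."""
    return template % (split, video_id, frame_name)


def missing_files(paths):
    """Return the paths that do not point to an existing file."""
    return [path for path in paths if not os.path.isfile(path)]
```

For example, `missing_files([davis_path(DAVIS_IMG, "bear", 0)])` should return an empty list once `DAVIS_IMG` points at your copy of DAVIS (substitute any sequence name present in your download).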

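Then select the training and test datasets and the network. Keep only one of the two `TRAIN_DATASET` assignments active: the first list (static-image datasets) is used for pretraining, the second (video datasets) for fine-tuning. If both are left in place, the second silently overwrites the first.

```python
# Dataset Options: DAVIS, DAVIS_FRAMES, YOUTUBE_VOS, ECSSD, MSCOCO, PASCAL_VOC, MSRA10K, ADE20K
# Enable exactly one TRAIN_DATASET line; a later assignment overrides an earlier one.
# __C.DATASET.TRAIN_DATASET = ['ECSSD', 'PASCAL_VOC', 'MSRA10K', 'MSCOCO']  # Pretrain
__C.DATASET.TRAIN_DATASET = ['YOUTUBE_VOS', 'DAVISx5']  # Fine-tune
__C.DATASET.TEST_DATASET = 'DAVIS'

# Network Options: RMNet, TinyFlowNet
__C.TRAIN.NETWORK = 'RMNet'
```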
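Because the two `TRAIN_DATASET` lists correspond to two training stages, one way to avoid editing the configuration between stages is to pick the list from a variable. This is a hypothetical pattern, not something `runner.py` is known to support: the `RMNET_STAGE` environment variable, the stage names and the helper name are made up for illustration.

```python
import os

# Dataset lists copied from the configuration; stage names are hypothetical.
TRAIN_DATASETS_BY_STAGE = {
    "pretrain": ["ECSSD", "PASCAL_VOC", "MSRA10K", "MSCOCO"],
    "finetune": ["YOUTUBE_VOS", "DAVISx5"],
}


def select_train_datasets(stage=None):
    """Return the dataset list for `stage`, defaulting to $RMNET_STAGE or 'finetune'."""
    if stage is None:
        stage = os.environ.get("RMNET_STAGE", "finetune")  # hypothetical variable
    if stage not in TRAIN_DATASETS_BY_STAGE:
        choices = ", ".join(sorted(TRAIN_DATASETS_BY_STAGE))
        raise ValueError(f"unknown stage {stage!r}; expected one of: {choices}")
    # Return a copy so callers cannot mutate the shared table.
    return list(TRAIN_DATASETS_BY_STAGE[stage])
```

In the configuration this would become `__C.DATASET.TRAIN_DATASET = select_train_datasets()`, and a pretraining run would then be started with `RMNET_STAGE=pretrain python3 runner.py`.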

To train RMNet, run:

```bash
python3 runner.py
```

To test RMNet with a pretrained model, run:

```bash
python3 runner.py --test --weights=/path/to/pretrained/model.pth
```
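To evaluate several checkpoints in one go, the test command can be scripted. The sketch below only uses the `--test` and `--weights` flags shown above; `runner_command` and `evaluate_all` are hypothetical helpers. Since the test set comes from `__C.DATASET.TEST_DATASET`, the configuration still decides which benchmark each run evaluates on.

```python
import subprocess


def runner_command(test=False, weights=None, python="python3"):
    """Build the argv for runner.py from the two documented invocations."""
    if test and not weights:
        raise ValueError("--test needs a checkpoint passed via --weights")
    if weights and not test:
        raise ValueError("this sketch only passes --weights together with --test")
    cmd = [python, "runner.py"]
    if test:
        cmd += ["--test", f"--weights={weights}"]
    return cmd


def evaluate_all(checkpoints, cwd="."):
    """Run the test command once per checkpoint, stopping at the first failure."""
    for path in checkpoints:
        subprocess.run(runner_command(test=True, weights=path), cwd=cwd, check=True)
```

For example, `evaluate_all(["/path/to/pretrained/model.pth"])` run from the repository root is equivalent to the test command above.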

This project is open-sourced under the MIT license.