Deep learned, hardware-accelerated 3D object pose estimation

Isaac ROS Pose Estimation

contains ROS 2 packages to predict the pose of an object.

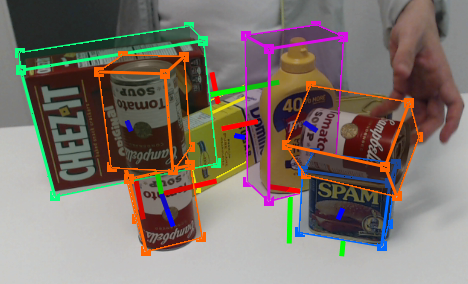

isaac_ros_dope estimates the object’s pose using the 3D

bounding cuboid dimensions of a known object in an input image.

isaac_ros_centerpose estimates the object’s pose using the 3D

bounding cuboid dimensions of unknown object instances in a known

category of objects from an input image. isaac_ros_dope and

isaac_ros_centerpose use GPU acceleration for DNN inference to

estimate the pose of an object. The output prediction can be used by

perception functions when fusing with the corresponding depth to provide

the 3D pose of an object and distance for navigation or manipulation.

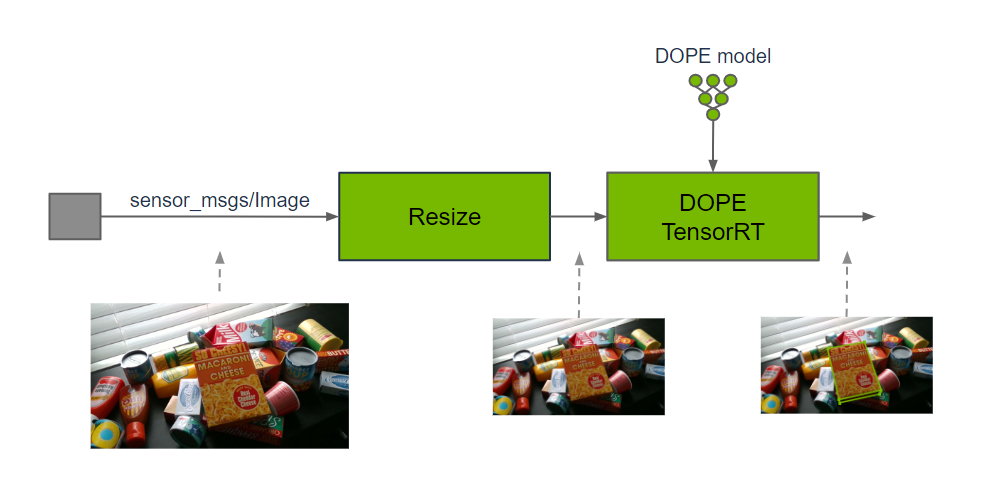

isaac_ros_dope is used in a graph of nodes to estimate the pose of a

known object with 3D bounding cuboid dimensions. To produce the

estimate, a DOPE (Deep

Object Pose Estimation) pre-trained model is required. Input images may

need to be cropped and resized to maintain the aspect ratio and match

the input resolution of DOPE. After DNN inference has produced an estimate, the

DNN decoder will use the specified object type, along with the belief

maps produced by model inference, to output object poses.

NVLabs has provided a DOPE pre-trained model using the

HOPE dataset. HOPE stands

for Household Objects for Pose Estimation. HOPE is a research-oriented

dataset that uses toy grocery objects and 3D textured meshes of the objects

for training on synthetic data. To use DOPE for other objects that are

relevant to your application, the model needs to be trained with another

dataset targeting these objects. For example, DOPE has been trained to

detect dollies for use with a mobile robot that navigates under, lifts,

and moves that type of dolly. To train your own DOPE model, please refer to the

Training your Own DOPE Model Tutorial.

isaac_ros_centerpose has similarities to isaac_ros_dope in that

both estimate an object pose; however, isaac_ros_centerpose provides

additional functionality. The

CenterPose DNN performs

object detection on the image, generates 2D keypoints for the object,

estimates the 6-DoF pose up to a scale, and regresses relative 3D bounding cuboid

dimensions. This is performed on a known object class without knowing

the instance-for example, a CenterPose model can detect a chair without having trained on

images of that specific chair.

Pose estimation is a compute-intensive task and therefore not performed at the frame rate of an input camera. To make efficient use of resources, object pose is estimated for a single frame and used as an input to navigation. Additional object pose estimates are computed to further refine navigation in progress at a lower frequency than the input rate of a typical camera.

Packages in this repository rely on accelerated DNN model inference

using Triton or

TensorRT from Isaac ROS DNN Inference.

For preprocessing, packages in this rely on the Isaac ROS DNN Image Encoder,

which can also be found at Isaac ROS DNN Inference.

| Sample Graph |

Input Size |

AGX Orin |

Orin NX |

Orin Nano 8GB |

x86_64 w/ RTX 4060 Ti |

|---|---|---|---|---|---|

| DOPE Pose Estimation Graph |

VGA |

39.8 fps 33 ms |

17.3 fps 120 ms |

– |

89.2 fps 15 ms |

| Centerpose Pose Estimation Graph |

VGA |

36.1 fps 5.7 ms |

19.4 fps 7.4 ms |

13.8 fps 12 ms |

50.2 fps 14 ms |

Please visit the Isaac ROS Documentation to learn how to use this repository.

Update 2023-10-18: Adding NITROS CenterPose decoder.